How Can We Find (Evaluate) a Good Agent?

Alright, But How the Hell Can We Find (Evaluate) a Good Agent?

What Makes a "Good Agent"? Evaluating Agents in the Era of LLM-Based RAG Systems

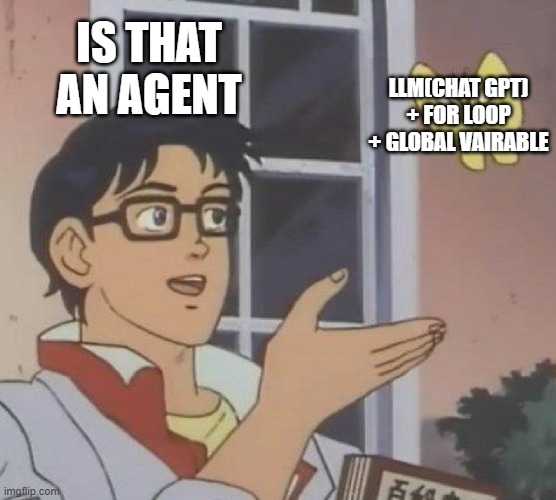

The rise of Large Language Model (LLM)-powered Retrieval-Augmented Generation (RAG) has led to an explosion of projects and services claiming to integrate "agents" into their systems. These agents are being pitched for everything from task automation to advanced decision-making. Amid the hype, a critical question emerges: what constitutes a good agent? As the AI community navigates this flood of agent-based solutions, it needs robust evaluation methods that separate effective agents from underperforming ones. This article explores how to evaluate agents using methods like "G-Eval" and "Hallucination + RAG Evaluation", and why doing so is critical for the future of agent-based systems.

The Current Challenge: Defining a Good Agent

An agent in the context of LLM-based RAG systems typically performs tasks by combining reasoning, retrieval, and interaction capabilities. However, the effectiveness of these agents varies widely due to:

- Ambiguous Standards: There is no universally agreed-upon metric for evaluating an agent’s performance.

- Complexity of Multi-Step Tasks: Many agents fail to maintain contextual accuracy across multi-turn or complex interactions.

- Hallucinations: Agents often generate factually incorrect or irrelevant responses, undermining trust and utility.

- Domain-Specific Demands: Agents must adapt to the nuances of specific fields, such as healthcare, finance, or Web3.

Without a rigorous evaluation framework, it’s hard to tell which agents are truly effective, and just as hard to know what to fix in the ones that are not.
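To make "an agreed-upon standard" a little more concrete, here is a minimal sketch of a scorecard that puts the four challenges above on one scale. Everything in it is an assumption made for illustration: the `AgentScorecard` class, its four dimension names, and the weights are hypothetical, not an established metric or any library's API. How each per-dimension score gets produced (human review, an LLM judge, a retrieval check) is left open here.

```python
from collections.abc import Mapping
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AgentScorecard:
    """Hypothetical per-agent scores, each in [0, 1] (higher is better)."""
    task_success: float       # did the agent complete the task as specified?
    context_retention: float  # accuracy kept across multi-turn or multi-step runs
    groundedness: float       # share of claims supported by retrieved context
    domain_fit: float         # correctness against domain-specific expectations


# Illustrative weights only (an assumption); a real project would choose its own.
WEIGHTS: Mapping[str, float] = {
    "task_success": 0.4,
    "context_retention": 0.2,
    "groundedness": 0.3,
    "domain_fit": 0.1,
}


def overall_score(card: AgentScorecard, weights: Mapping[str, float] = WEIGHTS) -> float:
    """Return the weighted average of all scorecard dimensions."""
    names = [f.name for f in fields(card)]
    for name in names:
        value = getattr(card, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    total_weight = sum(weights[name] for name in names)
    weighted_sum = sum(weights[name] * getattr(card, name) for name in names)
    return weighted_sum / total_weight


# Example: an agent that finishes tasks but hallucinates noticeably.
card = AgentScorecard(
    task_success=0.9,
    context_retention=0.6,
    groundedness=0.5,
    domain_fit=0.8,
)
print(round(overall_score(card), 3))
```

A single number hides a lot, so the per-dimension scores are usually worth reporting next to the aggregate. In this example, a low `groundedness` value flags a hallucination problem even when `task_success` looks healthy.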